|

I love working with graduate students because I almost always learn something while I’m helping them. One of the most common topics that students come to me with is regression analysis. You know, that scary statistical technique for finding the equation of a line. Well, it’s not all that scary and there’s lots of fun things you can do with it. But students always have the sense that there is something more to it. Believe me there’s not. If you’ve taken an even moderately rigorous course in graduate level applied stats you know regression. Let’s get into this. This came up in a discussion recently with one of my students. First a little background. Years ago, I interviewed for a job with an elite research institute. I didn’t know it was elite when I interviewed because if I had known that I would have had the terrible jitters. The man who interviewed me was a world-class applied statistician who had a reputation for striking fear into the hearts of even the executive director of the institute. I didn’t know that either at the time I interviewed. He asked me, “What do you think of regression?” What an odd question. My answer was equally odd. I said, “It’s a good excuse for bad research.” And by the way, I got the job.. My answer was the right one then and it is still the right one now. So, what is regression anyway. It is simply a method for finding the equation of a line through a given set of points. We all remember (or maybe you have repressed it) the equation of a line from high school algebra: y = ax+ b. Well, this is great. If we know the coordinates of any two points on a plane, we can determine the equation of the line between those two points. But what happens when we have a gazzilion points that don’t all lie on the same line such as in the figure below. Regression allows us to find the straight line of best fit for all of those data points. That is all there is to it. It uses a criterion called least squares which ensures that the sum of the distance between the given line and all the data points is the smallest possible. This means that the line is as close as possible to all the data points. Why is it called least squares? Because before we add all of the distances between the line and points in the set, we square them so that when we add them up, they don’t sum to zero. Multiple regression is just an extension of simple regression to more than one independent variable. Why then is regression a good excuse for bad research? To do a proper analysis with regression you develop an a priori hypothesis that certain independent variables will have a significant relationship to the dependent variable. Those variables get included in your analysis. What happens all too often in practice is that researchers will collect a whole bunch of data and try different combinations of one or ones that give them the best results. This is a big no, no and can lead you down the primrose path. Here’s an example. Shortly after taking the job at the research institute mentioned above, we were asked to take a look at a test that the institute had developed to help screen job applicants. The client said it wasn’t working. Sure enough, when we analyzed the new data there was no relationship between the predictors (scores on the test) and subsequent job performance. When we investigated a little further, we learned that the team that had done the original research analyzed the heck out of the data until they found something that seemed to work. The client had spent a lot of money on developing this test and they felt they needed to find something that worked. They had inadvertently handed the client an empty bag! The problem of course with analyzing the heck out of a set of data is that if you have enough time and enough variables you are bound to find some significant results. That’s what we call run on alpha! Don’t do it. You will get significant differences or relationships 5% of the time if you set alpha at .05. What did my student learn? What did I learn while working with her? Tune in next month and you’ll find out.

0 Comments

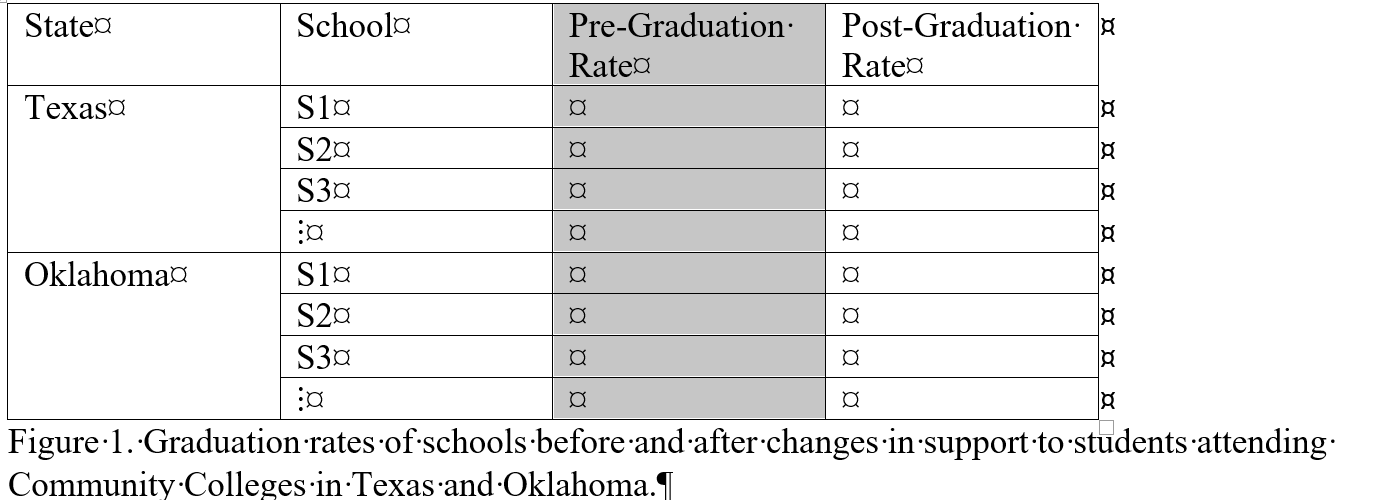

The above quote and title of this missive is often attributed to Benjamin Disraeli by way of Mark Twain. It seems to imply that statistics anchor the bottom of the untruth continuum. We can all recall instances of people misusing numbers or outright lying with numbers but in trusty hands statistics should help us understand phenomena more clearly. If I tell you that a man doing a certain job makes a lot more than a woman doing the same job you may be vaguely interested. If I show you their pay stubs and one earns $2000 in a two week pay period and the other earns $3000 in the same period now I may have piqued your interest. But it may be more nuanced than that. The one with the $2000 check may be working part time while the other person may be working full time. How many hours? Is there a difference in grade level? If so, what does that imply? Did I specify who got the larger check? Was it the man or the woman? Sometimes everything you say can be true but what you leave out makes it misleading or untrue. The most recent case of this “unholy” practice was called to my attention by a friend I was helping with her graduate statistics course. Her assignment was to find an example in the literature of a two-sample t-test. You remember the t-test? We are not comparing chamomile with say Darjeeling. No, we are talking about the statistical procedure where we are comparing two sets of measurements to try and figure out if they are different on some measure of interest. For example, as in the case of the study my student found, the issue was if there was a difference in graduation rates at as a function of new support programs at Community Colleges in Texas and Oklahoma. They used a two-sample t-test to test to see the differences between the two states was statistically significant. Sounds pretty reasonable on the face of it. But, as my tutee astutely detected, there were some major flaws in the procedure. To begin with, they threw out all but fourteen of the schools from Oklahoma because ethnicity data was not included in the data sets and then they proceeded to use a t-test using just 14 schools from Oklahoma even though ethnicity had nothing to do with that part of the analysis. If they didn’t need the ethnicity data, they could have retained all of the Oklahoma schools for their analysis giving them more power and a more representative sample. Their analysis should never have been published. The proper design would have incorporated pre and post data and the Texas vs Oklahoma variable in a classic Split Plot design as in Figure 1 above. While ethnicity is no doubt an important variable, they did not have sufficient data to include it in their analysis. The article was rife with statistical errors. Be aware that publication does not mean an article is free from either error or artifice. Read every article carefully and look for faulty statistics and reasoning. Whether the errors were committed intentionally or through ineptness an inappropriate use of statistics can lead to erroneous conclusions and sometimes lead to action in a wrong direction. Another recent example of lying with statistic comes from the Mayoral race in Tampa, Florida. It had been alleged that the former Police Chief, then running for mayor, claimed a 70% decrease in the crime rate under her watch as Chief. It has been alleged that she was counting crimes differently under her watch. Instead of counting all of the charges related to an incident as independent crimes, as had always been done before, Chief Castor was allegedly only counting the incident as a single crime, thereby cutting the number of apparent crimes drastically. So, when she reported cutting the crime rate, she was using a different metric which resulted in the appearance of a lower crime rate. Whether she did this wittingly or unwittingly (or at all, as the matter is very unclear at this writing) again we see the inappropriate use of statistics leading to a major misimpression of reality. Applying another old adage,” If it sounds too good to be true it probably isn’t true”, the 70% crime decrease sounds too good to be true. In either of the cases cited above it is unfortunate that there was no one sufficiently versed in statistics to properly review the information before it became public. A proper vetting could have been beneficial in both cases. I didn’t start out wanting to write a blog. But the space was here on my new web site so I thought I would do something fun and interesting with it. Fun and interesting and statistics! Aren’t those terms oxymorons! We’ll see.

For example, I wrote a brief article years ago when Jesse “The Body” Ventura was elected Governor of Minnesota. The election pollsters completely missed Jesse as a factor in the election let alone did they find him to be a challenger. The reason of course was that the pollsters were using old polling technology – phone calls. Well by that time younger voters were already exclusively using cell phones and the pollsters didn’t have access to those phones. So, they completely missed the young demographic in their polling and it was that demographic that went for “The Body”. The pollsters are doing a better job these days but they are still lacking accuracy. And still that lack of accuracy is because it so hard to get a truly random and representative sample. Sorry, I got a little technical there but that’s the nub of it. So, going forward I will try to address topics that are of general interest and I hope that they will be engaging and fun – and if they are not you’ll let me know. |

AuthorEd Siegel Archives

May 2023

Categories |

RSS Feed

RSS Feed